The launch of DIMES, the Rockefeller Archive Center’s (RAC) new system for archival discovery and delivery, is now a month behind us. Having had a moment to breathe and reflect, I’d like to share some details about the RAC’s collaborative user experience (UX) research and testing strategies for this project, and how those strategies connect to our broader goal of operationalizing UX practice at the RAC.

Expanding expertise to enable new strategies

In the development of DIMES as part of the Rockefeller Archive Center’s Project Electron initiative, we have always centered RAC staff from the various teams (Reference, Processing, Collections Development, Research and Education, IT, and Digital Strategies) as a user group whose labor and expertise are valuable. Staff use our systems, and they also support researchers who use our systems. Because of this, it has been essential to build things with our colleagues to incorporate their knowledge and processes into the products.

In this blog and beyond, we’ve previously shared details about the participatory design activities and user research projects that our staff have engaged in, including card sorting exercises, persona development, scenario and site mapping activities, collaborative data modeling, wireframe review, usability testing studies (for many projects including our Aurora app testing and RAC Documentation Site testing), and the creation of service design blueprints for archival duplication and retrieval processes. Each of these activities has served to incorporate staff perspectives AND develop UX expertise amongst the staff, building organizational capacity for a more mature incorporation of UX processes and methods into the DIMES project work.

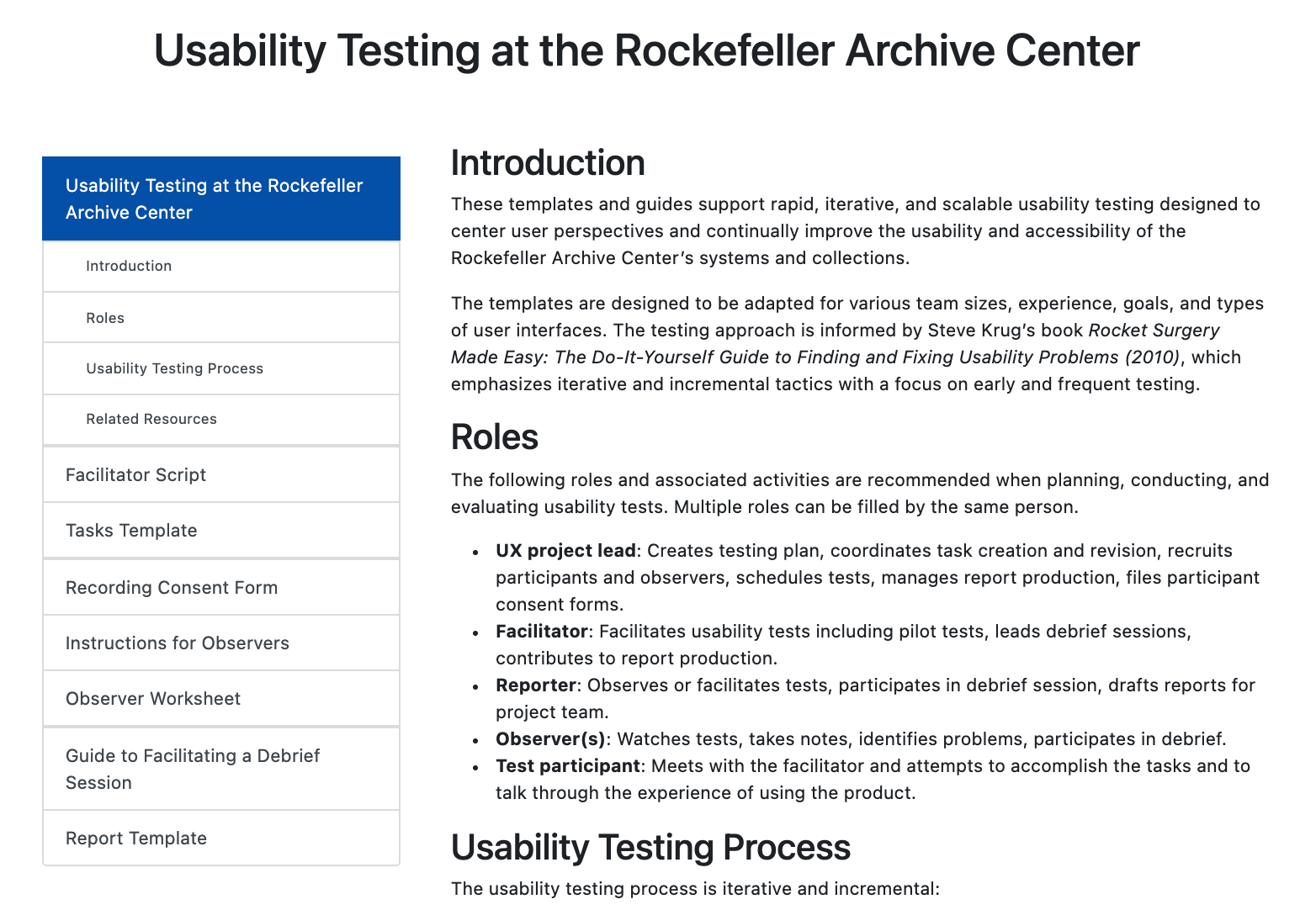

Our expanded capacity enabled us to execute six strategies as part of the DIMES project work, combining them into a programmatic approach that was new for us:

- Develop and use a set of usability testing guides and templates to standardize our testing approach and train practitioners.

- Test website design prototypes with users before writing any code.

- Conduct lightweight, iterative rounds of usability testing with researchers during site development instead of after the site is built.

- Recruit usability testing participants from a variety of contexts and perspectives instead of limiting recruitment to researchers working on-site in our Reading Rooms. We included users with different educational backgrounds, jobs, first languages, experiences using (or not) our archival discovery and request systems, and experiences (or not) conducting archival research.

- Form an “Observers Team” of four staff stakeholders from each RAC program area to observe usability tests and meet to evaluate the results.

- Report observed usability issues and resulting fixes to an advisory group of RAC Reference Archivists after each round of usability testing, serving to incorporate insights and domain knowledge from these key stakeholders, demonstrate the value of testing with users, and encourage staff buy-in.

The DIMES UX testing process

Designs and prototypes

We worked with a design agency, ondesign, to create the DIMES website. We shared some rudimentary wireframes, information architecture concepts, and user research insights with the ondesign team, and they used InVision to develop a new set of wireframes representing two design options. I tested these wireframes with researchers and staff to help determine which option to pursue, using a simple “can-I-bother-you-for-15-minutes?” method to quickly gather initial impressions from users. With a design direction established, ondesign produced a set of high-fidelity design treatments that I also tested with users and reviewed with members of our Reference staff.

Incremental and iterative usability testing

After multiple design iterations, we were ready to write some code to be able to test the site with real data from our collections. We built the archival request features first, which consist of a page to manage, email, and download a list of saved items, request copies of records, or request records in the Reading Room. Because the request features encompass the most complex aspects of the application, starting with just those discrete functions allowed us to study the usability of those features with users while continuing to build other aspects of the system. We iteratively built, tested, made changes, tested the changes, and expanded the usability study scope in each round of testing to include new tasks to target features of the site as they were built.

Overall, I conducted three rounds of usability testing, each consisting of three tests plus a pilot test. The first round focused on the “My List” page and request forms; the second covered site search functions and included retesting of changes made to the request features based on the first round; and the final round focused on search and discovery more broadly, including site navigation and accessing digital content in the IIIF viewer. Because we are using the Mirador IIIF viewer, I connected with colleagues at Stanford Libraries who had previously completed some usability studies of Mirador, and who generously shared their insights and task ideas to focus our study.

COVID-19 hit just as we were starting to write code, so while the early tests were in person, I pivoted to Zoom with screen sharing to conduct the three rounds of usability tests. This worked well because I could open the site, which was not yet deployed on a server, locally on my computer while still allowing the participant to take control. Remote testing also allowed me to include a much broader group of users in multiple countries.

Evaluation and reporting

After each round, an “Observers Team” representing each of the RAC Program Areas watched recordings of the tests and met with me to discuss the results, bringing in perspectives from across the organization. The lead DIMES developer, Hillel Arnold, also watched the recordings, and we met to determine how to fix the issues and which features needed further testing. We used GitHub to manage the issues. After making changes to the site in each round, we reported the testing results and site progress to a group of RAC Reference staff.

What We Learned

As a major project representing the culmination of some significant organizational shifts, the development of DIMES has been an opportunity to try new strategies and methods. I’d like to highlight three key lessons from the project:

- We learned that staff across the RAC can contribute to UX work. While UX is part of my job description and a focus of my work, the Digital Strategies Team has been engaging staff across the organization for several years now to incorporate UX concepts and strategies into project planning and evaluation. Our ability to bring UX research and testing into this project at every stage, and with organization-wide contributions, tells me that we have made important progress in distributing this expertise.

- We learned that overlapping expertise between developers and UX practitioners allows us to be nimble and responsive. While focused on the UX testing, I also reviewed and internalized the frontend code; while focused on the coding, Hillel also reviewed and internalized the UX testing. The easy translation between these domains meant that we could effectively apply the Observers Team’s insights, communicate clearly with the project team and stakeholders, and comprehensively address issues that cut across multiple domains, like web accessibility.

- We learned that the earlier we can test designs with real data, the better. We were already far along with our design concept before we tested that design with our real archival data, and that data revealed some usability issues we had not anticipated because we had previously only tested the design treatment prototypes with mock records. This was not a huge loss, but it was a good lesson in connecting a concept with actual data as soon as possible.

Next steps

We will continue to improve DIMES! We have lists of adjustments and enhancements we’d like to implement, and those changes will likely necessitate more testing with users. I would also like to include participants who use assistive technology like screen readers in our testing; the sudden COVID-19 pivot to remote testing created some technological challenges there, but I’d like to tackle those challenges.

Finally, we will continue to build our UX capacity and maturity at the RAC. We have demonstrated that user research and testing can be done quickly and effectively with clear benefits, and will continue to expand that knowledge and capacity.